My demo with Google’s AR glasses went better than the one on stage

I got hands-on time with Google’s prototype AR glasses at Google I/O 2025. While they had some technical difficulties and lag during the live keynote demo, these wireless glasses impressed me in a way I didn’t expect during my brief press demo.

Google hasn’t announced any plans to sell these AR glasses — a reference design co-created by Samsung — but it’s enlisted Warby Parker and Gentle Monster to design stylish, Gemini-powered smart glasses in the future.

Google’s Android XR glasses have no tether or puck, and they felt surprisingly light and svelte compared to other AR glasses like Meta Orion.

They had only one display, in the right lens, but to my surprise, this didn’t end up bothering me. Dual displays may be the future of Android XR, but I’m fine with Google bringing back the Google Glass style for now.

Google had nothing to share on specs like weight or battery life, unsurprisingly. But the Android XR prototype glasses leave me convinced that Google might actually pull AR glasses off. And at the very least, its non-holographic smart glasses are going to be a big deal.

Might as well call them Gemini glasses

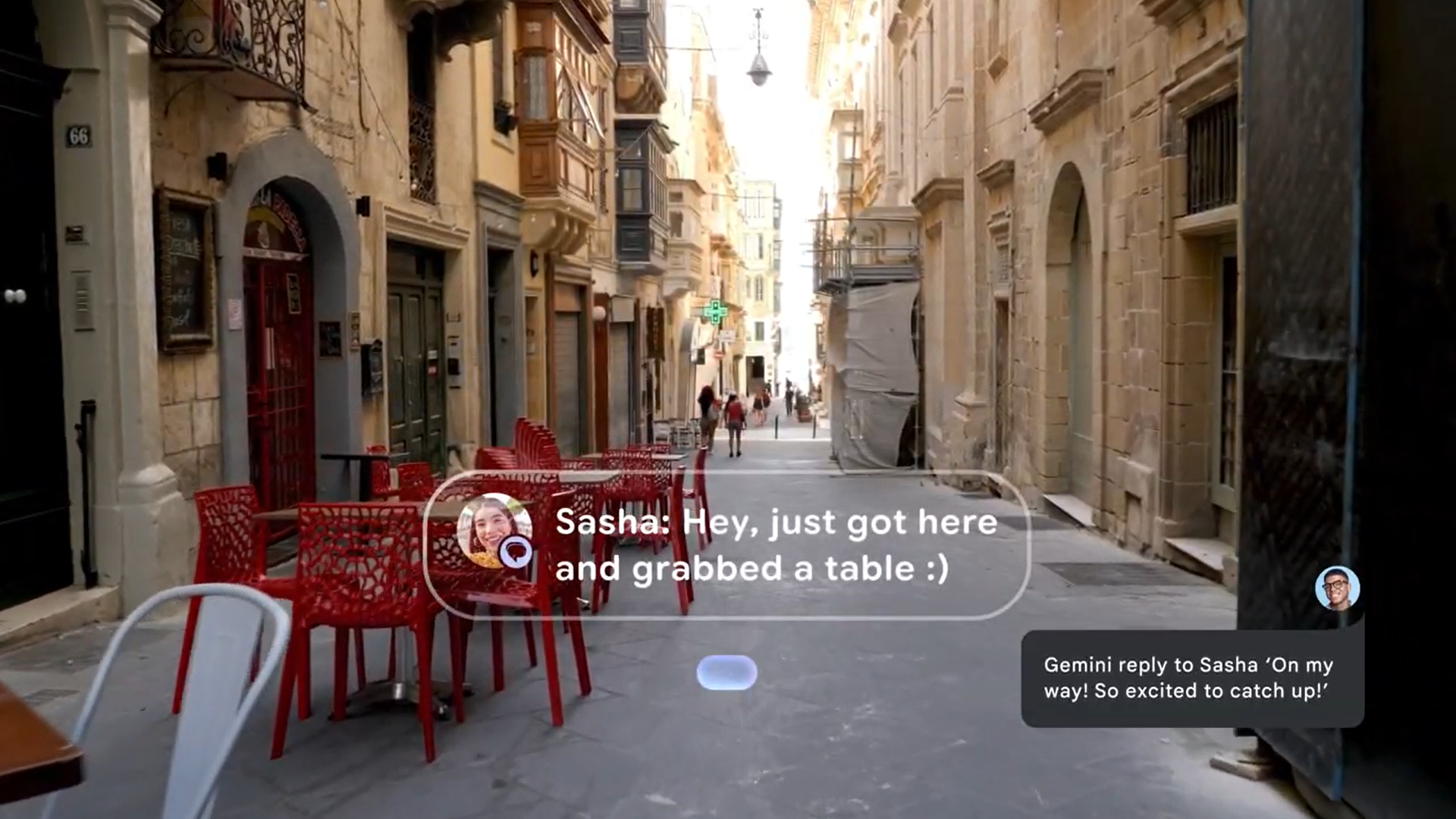

My Android XR demo booth was full of art, books, and other visual content for Gemini to analyze. You activate the multimodal assistant by pressing and holding a touch area on the right temple. It then starts analyzing and remembering your surroundings until you tap and hold it again.

I looked at a book of “epic hikes” and asked Gemini to recommend one in the Bay Area where I live; it pulled info from that book and pointed me to Yosemite, which is a bit of a drive but still relevant context.

I then looked at a painting, had Gemini summarize its artist and history, and then compared its themes against the painting next to it. Gemini complied happily; it’s the kind of thing you’d expect if you use Gemini Live on your Android phone, except almost entirely hands-free.

With the holographic display, you see relevant info in response to your Gemini commands. That said, I can already envision how it would work without a display, since Google said during the keynote it would be “optional.” This would let Google and Samsung sell cheaper Android XR smart glasses that compete directly with Meta Ray-Ban glasses.

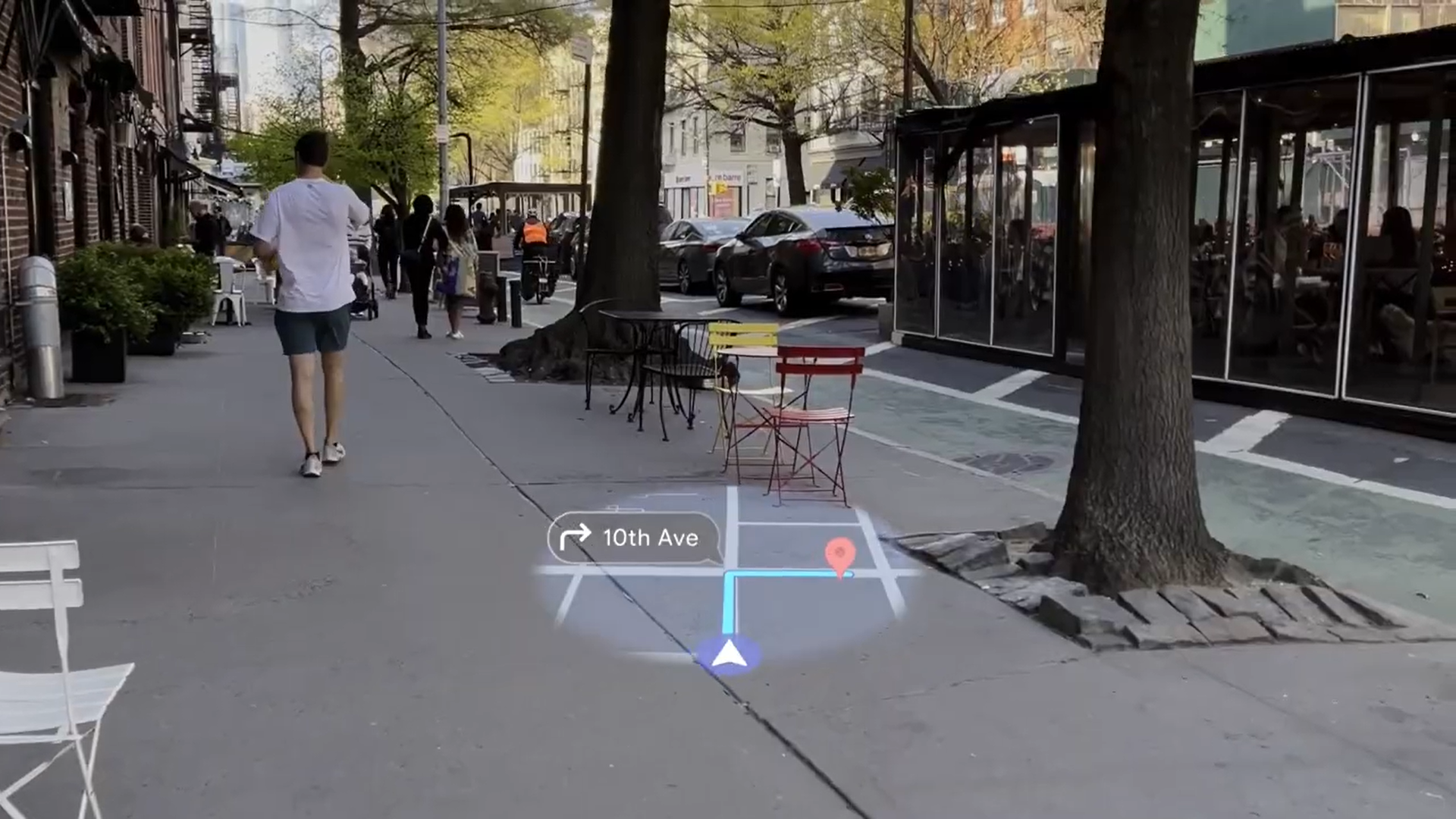

My immediate favorite Android XR feature was Google Maps. Google had a destination pre-loaded for me to walk out of Shoreline. What fascinated me was how it showed a simple arrow and street name pop-up while I looked forward, then switched seamlessly to a live map view when I looked downward (see the gallery above).

Other Android XR apps were more straightforward, showing Calendar reminders or Messages pop-ups in the bottom portion of my vision. But changing the HUD content based on where you’re looking is a simple but excellent idea; I could imagine a Fitbit app showing HR and pace normally during a run but adding more data if you stop and look down, for instance.
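To make that idea concrete, here is a minimal Python sketch of gaze-dependent HUD switching for the imagined running app. Everything in it is hypothetical: the `hud_lines` function, the `RunStats` fields, and the 25-degree pitch threshold are my own assumptions for illustration, not anything Google showed or a real Android XR API.

```python
from dataclasses import dataclass

# Hypothetical threshold: head pitch (degrees below horizontal) past which
# the wearer counts as "looking down". Not a real Android XR value.
LOOK_DOWN_PITCH_DEG = 25.0


@dataclass
class RunStats:
    heart_rate_bpm: int
    pace_min_per_km: float
    distance_km: float
    elapsed_min: float


def hud_lines(stats: RunStats, pitch_deg: float, is_moving: bool) -> list[str]:
    """Pick which lines to show based on head pitch and movement."""
    # Glanceable basics are always visible while looking ahead.
    lines = [
        f"HR {stats.heart_rate_bpm} bpm",
        f"Pace {stats.pace_min_per_km:.1f} min/km",
    ]
    # Extra detail only appears when the wearer stops and looks down.
    if pitch_deg >= LOOK_DOWN_PITCH_DEG and not is_moving:
        lines.append(f"Distance {stats.distance_km:.1f} km")
        lines.append(f"Elapsed {stats.elapsed_min:.0f} min")
    return lines


if __name__ == "__main__":
    stats = RunStats(heart_rate_bpm=150, pace_min_per_km=5.5,
                     distance_km=3.2, elapsed_min=18)
    print(hud_lines(stats, pitch_deg=0.0, is_moving=True))
    print(hud_lines(stats, pitch_deg=30.0, is_moving=False))
```

The point of the sketch is only that the rule can be tiny: two inputs (pitch and movement) decide between a minimal view and a detailed one, much like the Maps arrow-versus-map behavior in the demo.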

The mono-display style works

Just like the original Google Glass, this Android XR prototype has a single display, though Google has said the OS works for dual-display glasses, too.

I can’t speak to exact field of view (FoV), resolution, or brightness. The blue “I’m listening” Gemini line had a tiny bit of blurring around the edge, as did small text. But when the glasses displayed a full-size message or app pop-up, I had no trouble reading it.

Equally important, the display is positioned so that (most of the time) it doesn’t block your vision. Some actions, like taking a photo, do dominate the center of your view, but everything else sits where you can see the info while going about your day.

I’ve tried AR glasses in the past where the FoV is so small and the HUD so awkwardly placed that I struggle to perch the glasses properly to even see the content. It’s especially difficult to find the sweet spot on dual-display AR glasses, in my experience.

That’s why I didn’t mind a monocular display. Google prioritizes visibility in one eye and doesn’t have to bulk up the size to fit a second. It only took me about five seconds to perch my Google glasses in the sweet spot, and then I didn’t have to make any adjustments.

Meta allegedly plans to sell its single-display “Hypernova” glasses later in 2025, so it’ll be interesting to see how Android XR glasses match up against a Ray-Ban design and Meta AI assistant for usability and subtlety.

Blending in as “normal” glasses

These glasses work “in tandem” with your Android phone, which essentially means these Google AR glasses will only be as fast as your phone’s NPU allows. The glasses will process visual data from the cameras and your audio commands from the mic, then pass that info along for your phone to do the heavy lifting.

We’ll have to see what kind of battery life impact that has on your phone, but at the very least, it ensures that the glasses themselves don’t need to be especially massive for on-device processing.

The glasses’ temples are on the tall side, but they match the frames’ thick aesthetic, so people might assume it’s a stylistic choice until the camera LED turns on. Their look and size remind me a lot of Meta’s Project Aria Gen 2 glasses, another research prototype. And those lack any holographic tech.

More importantly, Google’s AR glasses felt comfortable to wear, and they were not too different from my Ray-Bans. I’m sure they’d slide down my nose from the weight if not properly fitted, and it’s hard to judge weight fatigue in a quick demo period, but in theory, I could see myself wearing them all day without complaint. And that’s really important for AR glasses to pull off, as much as performance and battery.

I’m excited to see Gentle Monster and Warby Parker take their shot at Android XR glasses, and hypothetically see Google AR and smart glasses available in regular eyewear stores in the future.

Overall, I don’t want to read too much into a brief, carefully curated demo. I’m sure a few days with these glasses would show more pain points, such as battery life. But reasonable skepticism aside, I’m more excited about Android XR than I was going into Google I/O.

If Google can make a consumer-viable version of this reference design, I think it has a chance to outperform Google Glass.
